Keeping a close eye on your website's performance means constantly tracking key speed and responsiveness metrics. This isn't just about tinkering under the bonnet; it's about guaranteeing a fast, reliable experience for every single visitor. It’s a proactive process that helps you identify and fix issues before they ever impact your customers, protecting both your revenue and your search engine rankings.

Why Performance Monitoring Is Mission-Critical

Slow websites don't just frustrate people; they actively cost you money. In a world where every single millisecond counts, leaving your site's performance to chance is a massive gamble. Continuous monitoring shifts you away from a reactive "fix-it-when-it-breaks" approach to a proper, proactive strategy that actively safeguards your digital presence.

And it’s not just about keeping impatient visitors happy. Search engines like Google are explicit about using page speed as a ranking factor. A sluggish site can seriously harm your visibility, making it much harder for potential customers to find you in the first place.

The Real Cost of a Slow Website

The link between website speed and business success is direct, measurable, and often brutal. For an e-commerce store, just a one-second delay in page load time can cause a significant drop in conversions. For a publisher, it means higher bounce rates and less ad revenue. The impact ripples through every part of your business.

Think about these very real consequences:

- Lost Revenue: Shoppers will abandon their carts or simply leave before they even see your best offers.

- Damaged Brand Reputation: A slow, unreliable site just feels unprofessional and untrustworthy.

- Lower SEO Rankings: Google prioritises sites that deliver a fast, superior user experience. Simple as that.

- Reduced Customer Loyalty: First impressions are everything, and a poor performance will stop people from coming back.

To really get your head around this, it helps to understand the critical importance of website speed and the massive impact it has on user behaviour.

Meeting Modern User Expectations

User patience is thinner than ever. Projections show that by 2025, a staggering 53% of mobile users in the UK are likely to abandon a website if it takes more than 3 seconds to load. This stat, found on kanukadigital.com, paints a clear picture: consumer expectations for speed are only going up.

A proactive monitoring strategy allows you to catch performance regressions caused by new code deployments, traffic spikes, or third-party script changes before they affect a large portion of your audience.

Ultimately, performance monitoring is a core part of any healthy digital strategy. It gives you the hard data you need to make informed decisions that drive measurable growth and ensure your site remains stable and reliable. That's why this process is a fundamental part of our ongoing website maintenance and support services—it helps businesses protect their investment and maintain a real competitive edge.

Choosing Performance Metrics That Actually Matter

It’s easy to get lost in a sea of data. Trying to track every single metric available is a fast track to analysis paralysis, and it won't actually help you make your website any better.

Effective performance monitoring isn't about collecting mountains of data. It’s about focusing on the numbers that genuinely reflect what your visitors are experiencing and being able to tell the difference between actionable insights and simple "vanity metrics".

Vanity metrics, like total page views, might look good in a report but they don’t tell you why people are behaving a certain way. Actionable metrics, on the other hand, connect directly to the user experience. This is where Google's Core Web Vitals come in, giving us a standardised way to measure the real-world feel of a website.

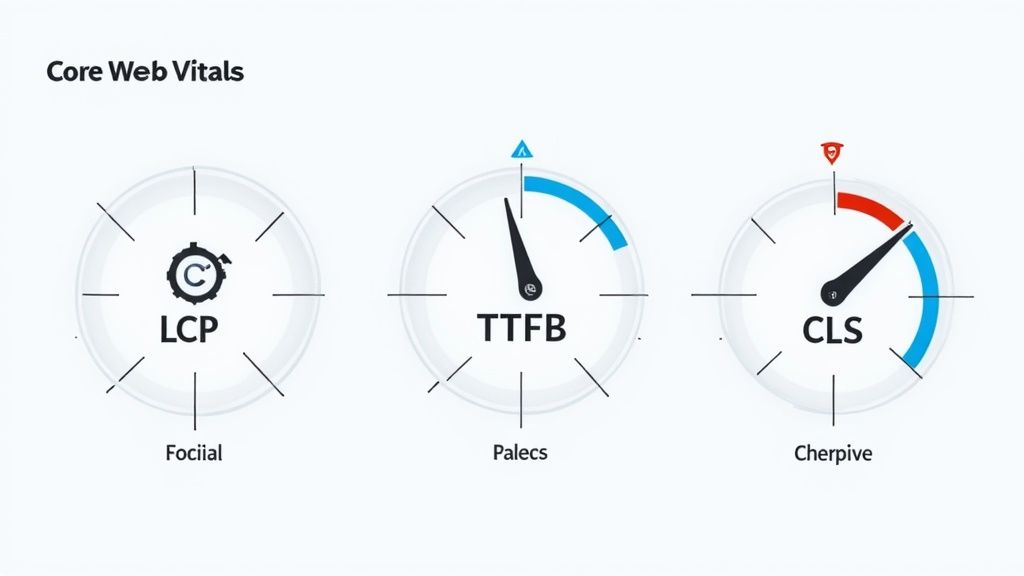

Focusing on Core Web Vitals

Google rolled out these metrics to give everyone a unified set of signals about the quality of a user's experience on a page. They’re not just technical jargon; they directly measure how a visitor perceives your site's speed, responsiveness, and visual stability.

Here’s a quick breakdown of what they actually mean:

- Largest Contentful Paint (LCP): This is all about perceived loading speed. It measures how long it takes for the largest image or text block to become visible. A good LCP score is crucial because it reassures the visitor that the page is actually working.

- Interaction to Next Paint (INP): This one’s all about responsiveness. INP measures the delay between a user's interaction (like clicking a button) and the browser actually showing a response. A low INP means your site feels snappy and interactive, not laggy.

- Cumulative Layout Shift (CLS): This tracks how visually stable your page is while it loads. A low CLS score means elements don't jump around unexpectedly, preventing those frustrating moments where someone tries to click a button, but it moves at the last second.

By prioritising these three metrics, you're not just optimising for search engines—you're focusing on the foundational elements that make a website enjoyable and easy to use. Great performance is a key part of how to improve your website's user experience.
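To make those three numbers easier to act on, here is a minimal sketch that sorts a measurement into "good", "needs improvement", or "poor". The thresholds are the ones Google publishes for Core Web Vitals rather than figures from this article, and the names `THRESHOLDS` and `rate_metric` are purely illustrative.

```python
# Google's published Core Web Vitals thresholds as (good_max, poor_min).
# LCP and INP are in milliseconds; CLS is a unitless score.
THRESHOLDS = {
    "LCP": (2500, 4000),
    "INP": (200, 500),
    "CLS": (0.1, 0.25),
}


def rate_metric(name: str, value: float) -> str:
    """Classify one measurement against the thresholds above."""
    good_max, poor_min = THRESHOLDS[name]
    if value <= good_max:
        return "good"
    if value <= poor_min:
        return "needs improvement"
    return "poor"
```

For example, `rate_metric("LCP", 2200)` returns `"good"`, while `rate_metric("LCP", 4200)` returns `"poor"`.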

Beyond the Core Vitals

While the Core Web Vitals are a brilliant starting point, they don't tell the whole story. Another critical metric to keep a close eye on is Time to First Byte (TTFB).

This measures how quickly your server responds to a request from a browser. Think of it as the time it takes for your website's server to even pick up the phone. A slow TTFB can be a dead giveaway of server-side issues, like inefficient code, slow database queries, or inadequate hosting, creating a bottleneck before your site even begins to render for the user.
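If you want a rough feel for TTFB without any extra tooling, a few lines of Python will do. This is only a sketch using the standard library: the function name `measure_ttfb` is made up for illustration, the timing includes DNS lookup and connection setup, and it will not match a browser's figure exactly.

```python
import http.client
import time
from urllib.parse import urlsplit


def measure_ttfb(url: str, timeout: float = 10.0) -> float:
    """Approximate seconds from starting a GET request to the first response byte."""
    parts = urlsplit(url)
    if parts.scheme == "https":
        conn = http.client.HTTPSConnection(parts.netloc, timeout=timeout)
    else:
        conn = http.client.HTTPConnection(parts.netloc, timeout=timeout)
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    try:
        start = time.perf_counter()
        conn.request("GET", path)      # connects (DNS, TCP, TLS) and sends the request
        response = conn.getresponse()  # returns once the status line and headers arrive
        response.read(1)               # read the first byte of the body
        return time.perf_counter() - start
    finally:
        conn.close()


# Example (makes a real network request, so it is left commented out):
# print(f"{measure_ttfb('https://example.com/') * 1000:.0f} ms")
```

Run it a handful of times and from more than one location if you can, because a single reading tells you very little.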

These metrics give you a clear baseline for performance. As of 2025, data from Google’s Chrome User Experience Report shows the average UK website's LCP is 1.8 seconds on mobile and 1.6 seconds on desktop, putting the UK slightly ahead of global averages. As this website speed statistics analysis from DebugBear explains, this user-centric data shows what real visitors are experiencing out in the wild.

The table below breaks down the most important metrics to watch, explaining what they measure and why they have such a big impact on your site's success.

Key Website Performance Metrics and Their Impact

| Metric (Abbreviation) | What It Measures | Why It Matters |
|---|---|---|
| Largest Contentful Paint (LCP) | The time it takes for the largest content element (e.g., an image or text block) to render on the screen. | Directly impacts perceived load speed. A slow LCP makes a site feel sluggish and can lead to high bounce rates. |
| Interaction to Next Paint (INP) | The latency of all user interactions (clicks, taps, key presses) with a page. | Measures real-world responsiveness. A low INP ensures the site feels quick and interactive, not frustratingly laggy. |
| Cumulative Layout Shift (CLS) | The largest burst of unexpected layout shifts that occurs during the entire lifespan of the page. | Gauges visual stability. A low CLS prevents annoying "jumping" content, which can cause users to misclick. |
| Time to First Byte (TTFB) | The time between the browser requesting a page and when it receives the first byte of information from the server. | Indicates server responsiveness. A high TTFB points to server-side problems that need fixing before any front-end optimisations can help. |

Ultimately, focusing on this curated set of metrics helps you cut through the noise and concentrate on changes that your users will genuinely feel.

Tailoring Metrics to Your Website's Goals

Of course, the metrics that matter most will also depend on your site's specific purpose. An e-commerce site and a content-heavy blog have very different priorities, and your monitoring should reflect that.

For an online shop, for example, metrics tied directly to conversions are vital. This includes the LCP of your main product images and, crucially, the INP of the "Add to Basket" button. A delay there can kill a sale.

For a blog, however, the focus might be on how quickly the main article content loads (LCP) and ensuring a smooth scrolling experience without any layout shifts (CLS) that could disrupt the reader. By aligning your website performance monitoring with your specific business goals, you move from just collecting data to gaining true clarity.

Building Your Performance Monitoring Toolkit

Right, you’ve picked out your key performance metrics. Now for the fun part: assembling the right set of tools to actually track them. Building a decent website performance monitoring toolkit isn't about throwing a load of money at the problem; it’s about a smart combination of services that gives you the full picture of your site's health.

The best way to think about this is to use two distinct types of monitoring: Synthetic and Real User Monitoring (RUM). It’s like the difference between a controlled lab test and genuine, real-world data.

- Synthetic Monitoring is basically sending out automated bots from servers all over the globe to regularly check up on your website. This is a brilliant, proactive way to check for uptime and catch any major issues in a controlled environment before your users ever see them.

- Real User Monitoring (RUM), which you’ll often see called field data, collects performance metrics directly from the browsers of your actual visitors. This shows you exactly how your site is performing for real people, on all their different devices, networks, and from various locations.

For a complete view, you absolutely need both. Synthetic monitoring tells you if your site is online and running as it should, while RUM reveals the true user experience, warts and all.

Getting Started with Free Monitoring Tools

You can get a surprisingly powerful setup going without spending a penny. Google Search Console, for instance, is non-negotiable. It gives you RUM data through its Core Web Vitals report, showing you how your pages are performing for actual Google Chrome users. You can even set it up to send email alerts when it spots new types of performance issues, like a sudden spike in pages with poor LCP scores.

For uptime, you need a dedicated service. The UK website performance monitoring market is growing fast, part of a global industry that’s projected to hit $15 billion by 2033. This growth has led to some fantastic free options for businesses. As a market analysis from Data Insights Market points out, UK companies often turn to services like StatusCake, which provides free uptime checks from UK locations including London and Manchester.

Here's a look at the kind of simple dashboard you can get for tracking your website’s status.

This kind of tool gives you an immediate, at-a-glance view of your site's availability, making sure you're the first to know if anything goes offline.

Combining Synthetic and Real User Data

A solid starter toolkit combines these free resources to cover all your bases.

- Google Search Console: This is your go-to for RUM data. Get into the habit of checking the Core Web Vitals report to understand the real-world user experience and pinpoint pages that need some optimisation work. Set up alerts so you're notified automatically about new issues.

- StatusCake (or similar): Set this up for synthetic uptime monitoring. Configure checks from a few different locations to run every 5-15 minutes. This ensures you get an instant alert via email or text if your website goes down.

By combining these tools, you create an automated system that flags both catastrophic failures (like downtime) and the more subtle performance dips that are quietly frustrating your visitors.
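To show what a synthetic uptime check is doing behind the scenes, here is a bare-bones sketch. It is not how StatusCake or any other service works internally; `is_up` and `DowntimeTracker` are hypothetical names, and waiting for three failed checks in a row before alerting is simply an assumption to avoid noise from one-off blips.

```python
import http.client
import urllib.request


def is_up(url: str, timeout: float = 10.0) -> bool:
    """Return True if the URL answers with a non-error HTTP status."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return 200 <= response.status < 400
    except (OSError, http.client.HTTPException):
        # Covers HTTP 4xx/5xx errors, timeouts, DNS failures and refused connections.
        return False


class DowntimeTracker:
    """Signal an alert only after several consecutive failed checks."""

    def __init__(self, failures_before_alert: int = 3):
        self.failures_before_alert = failures_before_alert
        self.consecutive_failures = 0

    def record(self, up: bool) -> bool:
        """Record one check result; return True at the moment an alert should fire."""
        if up:
            self.consecutive_failures = 0
            return False
        self.consecutive_failures += 1
        return self.consecutive_failures == self.failures_before_alert


# Example loop (commented out because it runs forever and makes real requests):
# import time
# tracker = DowntimeTracker()
# while True:
#     if tracker.record(is_up("https://example.com/")):
#         print("ALERT: site appears to be down")
#     time.sleep(300)  # every 5 minutes
```

A hosted service adds the parts that matter most in practice: checks from several locations and alerts that still reach you when your own infrastructure is the thing that has failed.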

As you build out this toolkit, it's also worth thinking about how you can effectively monitor network traffic for more detailed insights into how resources are being loaded and where delays might be creeping in. This mix of uptime, real-user, and network-level data provides a robust foundation for keeping your website fast and reliable.

Finding and Fixing Performance Bottlenecks

Collecting performance data is one thing, but knowing what to do with it is where you can make a real difference. Once your monitoring tools start pinging you with reports and alerts, the next job is to dive in and translate those numbers into a clear, actionable plan.

This process is less about being a technical wizard and more about being a detective, piecing together clues to find exactly what’s slowing things down for your visitors.

Your first port of call is almost always a waterfall chart. Think of it as a visual breakdown of how a browser loads every single file on your page, from top to bottom. It shows you the order, size, and load time of each resource—HTML, CSS, JavaScript, and images. At first glance, it can look intimidating, but it’s brilliant for spotting obvious culprits.

Interpreting Waterfall Charts to Find Clues

A waterfall chart is essentially a timeline of your page loading. You’re looking for two things: long bars, which represent slow-loading files, and strange gaps, which can indicate network delays or processing hold-ups.

By scanning this chart, you can quickly spot common issues that create performance bottlenecks.

- Render-Blocking Resources: These are typically the CSS and JavaScript files loaded right at the top in the <head> section of your HTML. They force the browser to stop everything and wait for them to download and process before it can show any content, leading to that dreaded blank screen for the user.

- Slow Server Response Time (TTFB): If the very first bar on the chart (usually your main HTML document) is suspiciously long, it points to a slow Time to First Byte. This means your server is taking too long to even start sending information, which could be down to anything from inefficient code to poor hosting.

- Unoptimised Assets: Are there huge image files taking seconds to download? Large, uncompressed images are one of the most frequent—and easily fixable—causes of a slow website.
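Most browser developer tools let you export the waterfall as a HAR file, which is just JSON, so you can scan it for the same clues automatically. The sketch below assumes the standard HAR 1.2 layout and that the first entry is your main HTML document. The function names and the three thresholds are illustrative choices, not fixed rules.

```python
import json


def load_har(path: str) -> dict:
    """Read a HAR file exported from the browser's network panel."""
    with open(path, encoding="utf-8") as handle:
        return json.load(handle)


def find_bottlenecks(har: dict, slow_ms: float = 1000.0,
                     large_bytes: int = 500_000,
                     slow_wait_ms: float = 800.0) -> list:
    """Flag long bars, oversized files and a slow first response."""
    findings = []
    for index, entry in enumerate(har["log"]["entries"]):
        url = entry["request"]["url"]
        total_ms = entry.get("time", 0)
        size = entry["response"]["content"].get("size", 0)
        wait_ms = entry.get("timings", {}).get("wait", 0)
        # Assumption: the first entry is the main HTML document.
        if index == 0 and wait_ms > slow_wait_ms:
            findings.append(f"slow server response: {url} waited {wait_ms:.0f} ms")
        if total_ms > slow_ms:
            findings.append(f"long bar: {url} took {total_ms:.0f} ms")
        if size > large_bytes:
            findings.append(f"large file: {url} is {size / 1_000_000:.1f} MB")
    return findings
```

Calling `find_bottlenecks(load_har("page.har"))` returns a plain list of findings you can read top to bottom, much like scanning the chart by eye.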

The visual below shows how to set up your monitoring system to begin collecting this kind of valuable data in the first place.

This graphic really just shows that a structured approach—picking tools, defining metrics, and setting alerts—is the foundation for any effective analysis.

A Real-World Scenario: The Slow Product Page

Let’s imagine you run an e-commerce site. You get an alert that your best-selling product page has a poor Largest Contentful Paint (LCP) score of 4.2 seconds. Your visitors are waiting over four seconds just to see the main product image, which is a total disaster for conversions.

You open up a waterfall chart for that specific page and immediately notice a few things:

- A large JavaScript file for a third-party review widget is blocking the page from rendering for almost a full second.

- The main product photo is a massive 2.1 MB PNG file, taking nearly two seconds to download all on its own.

- There's a long request chain where one CSS file calls another CSS file, which then finally loads a critical font file, delaying text from appearing.

In this single chart, you’ve moved from a vague problem ("the page is slow") to a specific diagnosis with clear causes. You haven’t fixed anything yet, but you know exactly where to start.

This analysis connects the poor LCP metric directly to its root causes. The oversized image is the primary culprit for the slow LCP, while the render-blocking JavaScript and tangled CSS chain are making the entire experience feel sluggish. Armed with this information, you can now build a targeted optimisation plan instead of just guessing.

Optimising Your Site and Measuring the Results

You’ve analysed the performance reports and found the exact bottlenecks slowing your site down. Now for the good bit: taking decisive action. The goal here isn’t just to make a few small tweaks; it’s about rolling out high-impact changes that your visitors will genuinely feel.

This is the moment your website performance monitoring setup proves its worth. It shifts from being a diagnostic tool to becoming your yardstick for success.

Without a baseline, you’re just guessing. Before you touch a single line of code, get a clear snapshot of your current metrics. Jot down your LCP, INP, CLS, and TTFB scores. This initial data is your benchmark: the crucial "before" picture that lets you demonstrate the real return on your optimisation efforts.

High-Impact Optimisation Techniques

With your baseline established, you can laser-focus on the fixes that deliver the biggest performance gains. Instead of getting lost in minor adjustments, concentrate on the most common and effective solutions for the problems you’ve found. This is where you find the low-hanging fruit of performance work.

Some of the most effective strategies include:

- Image Compression and Modern Formats: Large, unoptimised images are a classic cause of slow load times. Compressing your JPEGs and PNGs is a great start, but converting them to modern formats like WebP or AVIF is even better, offering superior quality at a much smaller file size.

- Minifying CSS and JavaScript: Minification is a fancy word for stripping out all the unnecessary characters from your code files (like white space and comments) without changing how they work. This makes the files smaller and much faster for browsers to download and process.

- Leveraging a Content Delivery Network (CDN): A CDN is a game-changer. It stores copies of your site's assets (images, CSS, JS) on servers located all over the world. When a user visits your site, these assets are served from the server closest to them, which dramatically reduces latency.
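To take the mystery out of minification, here is a deliberately naive sketch of what it does to a CSS file. In a real project you would use an established build tool, not this function: `minify_css` is a hypothetical helper that ignores edge cases such as string literals containing spaces or comment markers.

```python
import re


def minify_css(css: str) -> str:
    """Strip comments and unnecessary whitespace from a CSS string (naive)."""
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # remove /* comments */
    css = re.sub(r"\s+", " ", css)                         # collapse whitespace runs
    css = re.sub(r"\s*([{};,])\s*", r"\1", css)            # trim around punctuation
    css = css.replace(";}", "}")                           # drop the last semicolon in a rule
    return css.strip()


source = """
/* Button styles */
.button {
    color: red;
    margin: 0 auto;
}
"""
print(minify_css(source))  # .button{color: red;margin: 0 auto}
```

The rules behave exactly as before; the file is simply smaller, which is the whole point.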

These foundational fixes can have a huge effect on your site's speed. For a deeper dive, our guide on how to improve website speed provides more detailed, actionable steps you can take right now.

Tying Fixes to Common Problems

When your monitoring tools flag an issue, knowing the most likely fix saves you a ton of time. This table matches common performance problems with their most effective solutions, giving you a quick reference for where to start.

| Identified Problem | Potential Cause | High-Impact Solution |
|---|---|---|
| High LCP (Largest Contentful Paint) | A large, unoptimised hero image or video above the fold. | Aggressively compress the image or convert it to a modern format like WebP. Consider lazy-loading below-the-fold images. |
| High INP (Interaction to Next Paint) | Heavy JavaScript tasks blocking the main browser thread. | Minify and defer non-critical JavaScript. Break up long tasks into smaller chunks. |
| High TTFB (Time to First Byte) | Slow server response, complex database queries, or no caching. | Implement page caching. Upgrade your hosting plan. Use a CDN to reduce server load. |
| Poor Core Web Vitals score | A combination of slow loading, poor interactivity, and layout shifts. | Address LCP, INP, and CLS (Cumulative Layout Shift) issues individually. Start with image and code optimisation. |

This isn't an exhaustive list, but it covers the big hitters that cause the most headaches. Focusing your efforts here will almost always deliver the most noticeable improvements for your users.

Measuring the Impact of Your Changes

After implementing your optimisations, the job is only half done. Now it's time to head back to your monitoring tools to measure the results. Run the exact same performance tests you did to establish your baseline and compare the new metrics against the old ones.

Did your LCP score drop from a worrying 4 seconds to a healthy 2.2 seconds? Has your bounce rate on key landing pages decreased? This is the tangible proof that your hard work is paying off.
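If you keep your "before" and "after" numbers in a simple structure, the comparison takes a few lines. This is a rough sketch: `compare_metrics` is an illustrative name, and apart from the LCP figures mentioned above, the values in the example are made up.

```python
def compare_metrics(baseline: dict, current: dict) -> dict:
    """Percentage change per metric; negative means the number went down (improved)."""
    changes = {}
    for name, before in baseline.items():
        if name in current and before != 0:
            changes[name] = round((current[name] - before) / before * 100, 1)
    return changes


# LCP figures follow the example above; INP and TTFB are hypothetical.
before = {"LCP": 4000, "INP": 320, "TTFB": 900}  # milliseconds
after = {"LCP": 2200, "INP": 240, "TTFB": 900}
print(compare_metrics(before, after))  # {'LCP': -45.0, 'INP': -25.0, 'TTFB': 0.0}
```

Because lower is better for all of these metrics, a negative percentage is the result you are hoping for.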

This "measure, optimise, repeat" cycle is the very heart of effective website performance monitoring. It transforms performance from a one-off task into an ongoing process of continuous improvement, making sure your site stays fast and reliable as it evolves. By connecting your technical optimisations to real business outcomes, you can clearly see the value of maintaining a high-performing website.

Common Questions About Website Performance

Even with a solid monitoring plan, a few questions always seem to pop up. Let's tackle some of the most common ones to clear up any lingering confusion about keeping your website in top shape.

Is It Better to Use Lab Data or Field Data?

One of the most frequent questions I get is whether to trust lab data (such as the Lighthouse results shown in PageSpeed Insights) or field data, which you might know as Real User Monitoring (RUM). The honest answer? You need both. They tell you two different, equally important sides of the same story.

- Lab Data: This is your controlled experiment. It’s collected in a consistent environment with the same device and network settings every time. That makes it perfect for debugging and testing changes because you get repeatable results. Think of it as a clean, scientific test.

- Field Data (RUM): This is the real world. It comes from your actual visitors using all sorts of different devices, browsers, and internet connections. This is what reflects your audience's genuine experience, and it's what Google looks at for its Core Web Vitals assessment.

Lab data is brilliant for identifying a specific technical problem, while field data tells you how that problem is actually impacting your users. A smart strategy uses lab tests to diagnose and fix things, then uses field data to confirm that the fix actually worked for real people.
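One practical difference is that a lab test gives you a single number, while field data is a spread of many visits. Google assesses Core Web Vitals at the 75th percentile of that spread, so it helps to summarise your own RUM samples the same way. The sketch below uses the simple nearest-rank method, and the sample values are invented for illustration.

```python
import math


def percentile(values: list, pct: float = 75) -> float:
    """Nearest-rank percentile of a list of samples."""
    if not values:
        raise ValueError("no samples to summarise")
    ordered = sorted(values)
    rank = math.ceil(pct / 100 * len(ordered))
    return ordered[max(rank, 1) - 1]


# Hypothetical LCP samples in milliseconds from eight real visits.
field_lcp = [1200, 1800, 2100, 2600, 5200, 900, 1500, 3000]
print(percentile(field_lcp))  # 2600
```

Here a lab run might well report something close to the quickest visits, yet the 75th percentile sits at 2.6 seconds, which is the figure that better reflects what a sizeable share of visitors actually experience.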

How Often Should I Check My Website Performance?

Checking your site's performance shouldn't be a random task you remember to do every six months. For most businesses, a regular rhythm is what works best.

A weekly check-in is a great starting point. It's frequent enough to spot problems before they get out of hand but not so often that it becomes a chore. You can quickly review your Core Web Vitals report in Google Search Console and glance over your uptime logs.

Of course, if you’ve just launched a new feature or you're running a big marketing campaign that's driving a spike in traffic, you’ll want to keep a much closer eye on things for a few days.

Ultimately, your automated alerts should be doing most of the heavy lifting, letting you know the second something critical breaks.

Will Performance Monitoring Slow Down My Website?

This is a really valid concern. The short answer is no, not if it’s set up correctly.

Modern performance monitoring tools are designed to be incredibly lightweight. Synthetic monitoring, for example, tests your site from external servers, meaning it has zero impact on your actual users.

Real User Monitoring (RUM) scripts are also highly optimised. They are typically tiny, load asynchronously (so they don’t get in the way of your page rendering), and have a negligible effect on performance. The valuable insights you get from them are essential for keeping your site fast and far outweigh the minuscule processing time they might add.

A fast, reliable website is non-negotiable for business success. At Altitude Design, we build high-performance, hand-coded websites from the ground up, ensuring your business makes a brilliant first impression. Learn more about our approach at https://altitudedesign.co.uk.